
Project developed at the ENS Paris-Saclay, Centre Borelli, and accepted at the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV).


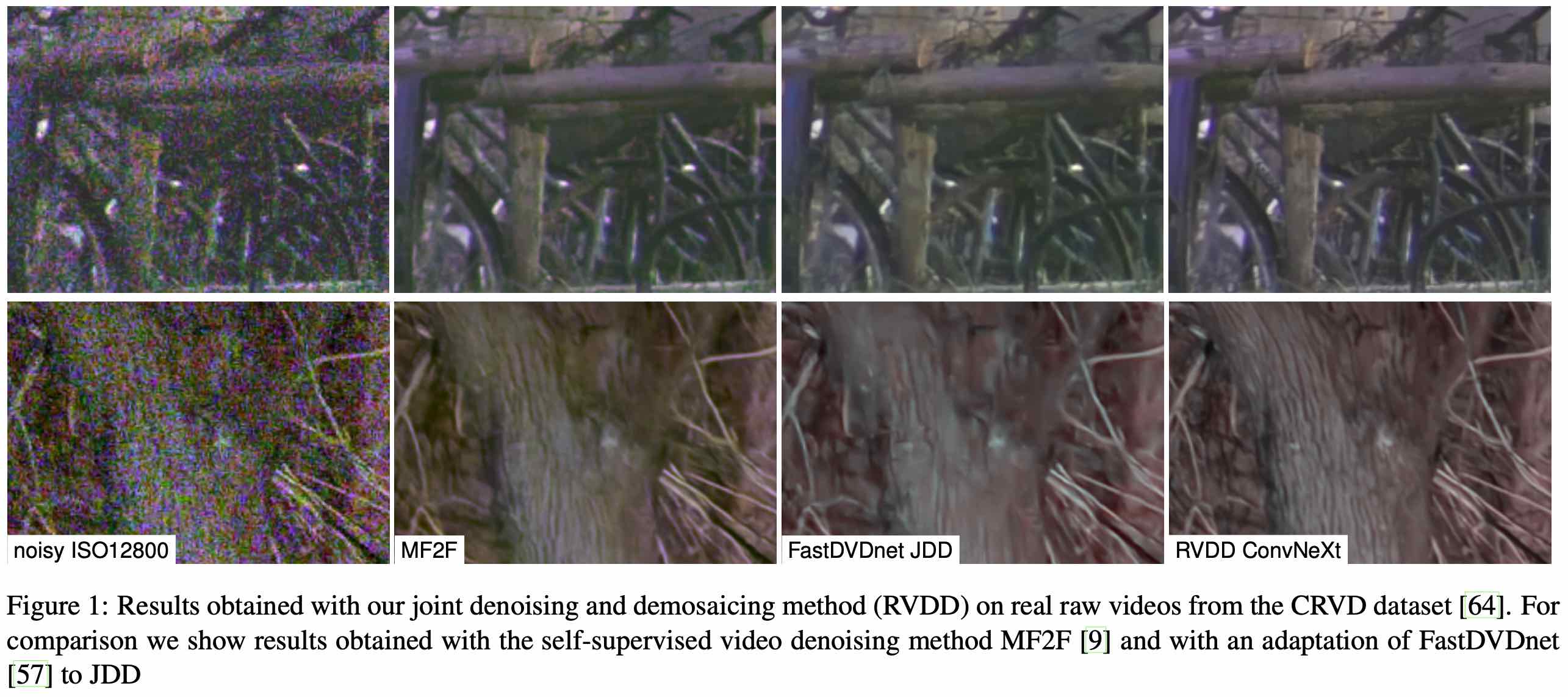

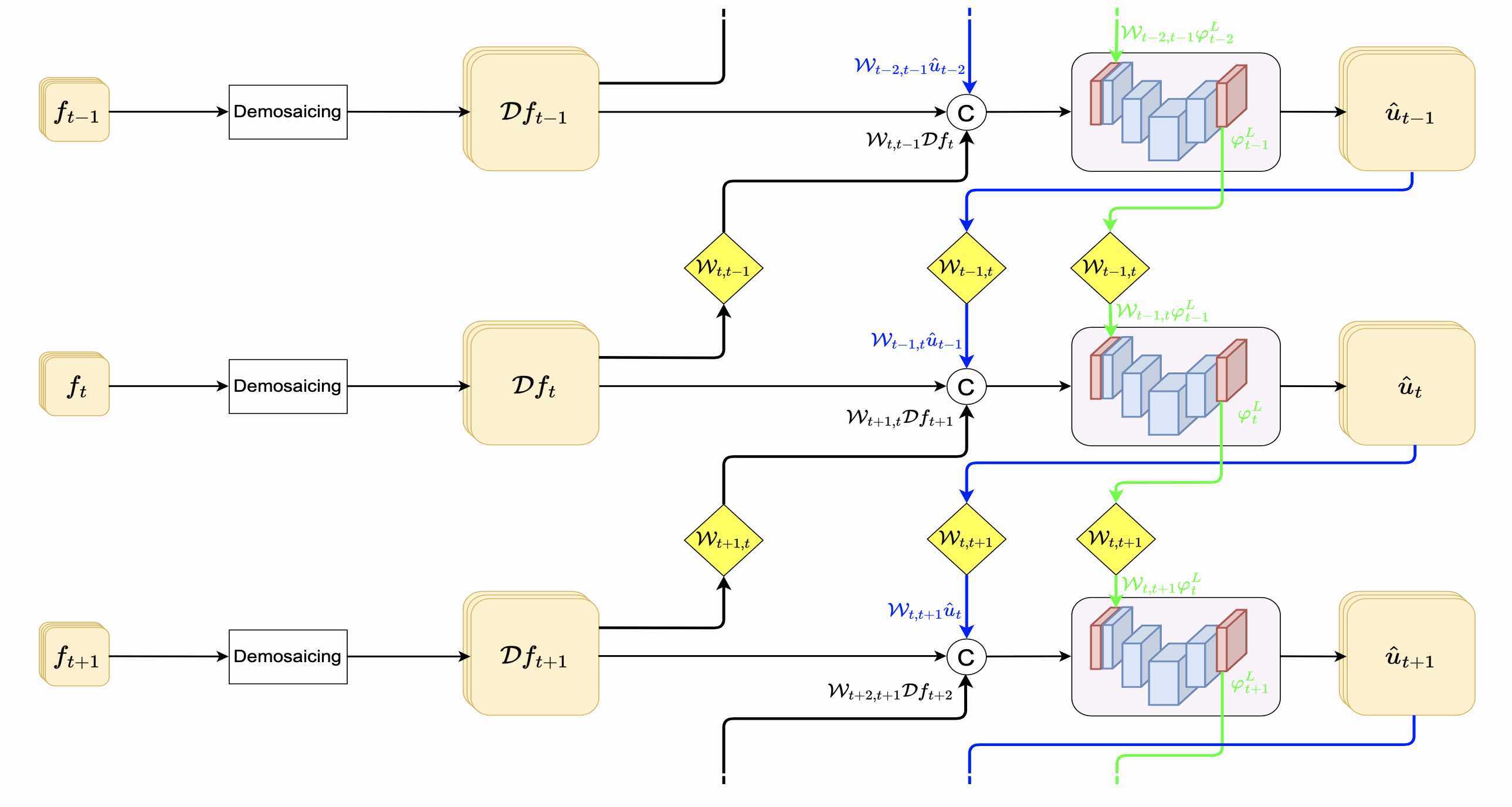

Denoising and demosaicing are two critical components of the image/video processing pipeline. While these two tasks have historically been considered mostly in isolation, current neural network approaches obtain state-of-the-art results by treating them jointly. However, most existing research focuses on joint denoising and demosaicing (JDD) of single images or bursts. Although related to burst JDD, video JDD deserves its own treatment. In this work we present an empirical exploration of different design aspects of video joint denoising and demosaicing using neural networks. We compare recurrent and non-recurrent approaches and explore aspects such as the type of information propagated in recurrent networks, motion compensation, video stabilization, and network architecture. We found that recurrent networks with motion compensation achieve the best results. Our work should serve as a strong baseline for future research in video JDD.
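A recurrent, motion-compensated design can be pictured as a loop in which the previous output is aligned to the current frame before being fed back to the network. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: the optical flow is assumed to be given (`flows[t]` maps frame t to frame t-1), the previous RGB output is assumed to be the propagated state, and `toy_step` is a hypothetical running average standing in for the CNN. To keep the toy consistent, the inputs are full-resolution RGB frames; a real model would instead take packed raw frames. The names `warp_backward`, `recurrent_jdd` and `toy_step` are illustrative.

```python
import numpy as np


def warp_backward(img, flow):
    """Bilinearly sample img (H, W, C) at (x + u, y + v), clamping at the borders.

    flow[..., 0] = u (horizontal) and flow[..., 1] = v (vertical) displacement
    from the current frame to the previous one (assumed convention).
    """
    h, w = img.shape[:2]
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    x = np.clip(xs + flow[..., 0], 0, w - 1)
    y = np.clip(ys + flow[..., 1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = (x - x0)[..., None]  # horizontal interpolation weights, (H, W, 1)
    wy = (y - y0)[..., None]  # vertical interpolation weights, (H, W, 1)
    top = (1 - wx) * img[y0, x0] + wx * img[y0, x1]
    bottom = (1 - wx) * img[y1, x0] + wx * img[y1, x1]
    return (1 - wy) * top + wy * bottom


def recurrent_jdd(frames, flows, step):
    """Run a recurrent model over a sequence (hypothetical helper).

    frames[t]: network input for frame t, shaped (H, W, C) in this toy.
    flows[t]:  flow from frame t to frame t-1 (flows[0] is unused).
    step:      stand-in for the CNN, (frame, warped_prev or None) -> RGB output.
    """
    outputs, prev = [], None
    for t, frame in enumerate(frames):
        # Motion compensation: align the previous output to the current frame.
        warped = None if prev is None else warp_backward(prev, flows[t])
        prev = step(frame, warped)
        outputs.append(prev)
    return outputs


def toy_step(frame, warped_prev):
    """Hypothetical placeholder for the CNN: a running average of aligned frames."""
    return frame if warped_prev is None else 0.5 * (frame + warped_prev)
```

Propagating the previous output is only one option; the abstract lists the type of propagated information as one of the design aspects studied, and a trained network would replace the toy running average.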


Description. Recurrent video joint denoising and demosaicing architecture in the RGB domain. Data inputs and outputs are represented as colored rounded squares: small squares represent the packed raw frames, whereas large squares represent RGB frames.
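A common way to pack a Bayer mosaic is to split it into its four color sub-lattices, giving a half-resolution, four-channel array that a CNN can process with ordinary convolutions. The sketch below assumes an RGGB pattern and the channel order [R, G1, G2, B]; the CFA layout and packing order actually used in the project may differ, and the function names are hypothetical.

```python
import numpy as np


def pack_bayer_rggb(raw):
    """Pack an (H, W) RGGB mosaic into an (H/2, W/2, 4) array ordered [R, G1, G2, B]."""
    if raw.ndim != 2 or raw.shape[0] % 2 or raw.shape[1] % 2:
        raise ValueError("expected a 2-D mosaic with even height and width")
    return np.stack(
        [
            raw[0::2, 0::2],  # R: even rows, even columns
            raw[0::2, 1::2],  # G1: green pixels on the red rows
            raw[1::2, 0::2],  # G2: green pixels on the blue rows
            raw[1::2, 1::2],  # B: odd rows, odd columns
        ],
        axis=-1,
    )


def unpack_bayer_rggb(packed):
    """Inverse of pack_bayer_rggb: rebuild the (H, W) mosaic from (H/2, W/2, 4)."""
    h, w, _ = packed.shape
    raw = np.empty((2 * h, 2 * w), dtype=packed.dtype)
    raw[0::2, 0::2] = packed[..., 0]
    raw[0::2, 1::2] = packed[..., 1]
    raw[1::2, 0::2] = packed[..., 2]
    raw[1::2, 1::2] = packed[..., 3]
    return raw
```

Packing halves each spatial dimension while keeping every raw sample, so the operation is lossless and exactly invertible.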


V. Dewil, A. Courtois, M. Rodriguez, N. Brandonisio, D. Bujoreanu, G. Facciolo, and P. Arias. "Video joint denoising and demosaicing with recurrent CNNs." In WACV, 2023. (hosted on arXiv)

Acknowledgements